Redundant or irrelevant features in data mining can mask underlying patterns, so the number of features is often reduced by applying a feature selection technique. The objective of feature selection is to find the feature subset that gives the best performance. This work proposes a new feature selection method that uses orthogonal filtering and a nonlinear representation of the data to enhance discrimination performance. Orthogonal filtering is applied to remove unwanted variation in the data. The proposed method adopts kernel principal component analysis, a nonlinear kernel method, to extract nonlinear characteristics of the data and to reduce its dimensionality. Feature selection is based on the selection criterion of linear discriminant analysis within an iterative backward feature elimination procedure. The performance of the proposed method is compared with that of three other methods, and the results show that it outperforms all three. The combined use of filtering and a kernel method is shown to be a promising tool for efficient feature selection.

A common problem in data analysis arises when a large number of features or variables hinders the investigation of patterns present in the data. The features are often not all equally informative, and many candidate features are considered because the relevant ones are unknown a priori. Redundant or irrelevant features can mask the underlying patterns of the data. Thus one often tries to reduce the number of features considered by applying a feature selection scheme (Akbaryan and Bishnoi, 2001 and Guyon and Elisseeff, 2003).

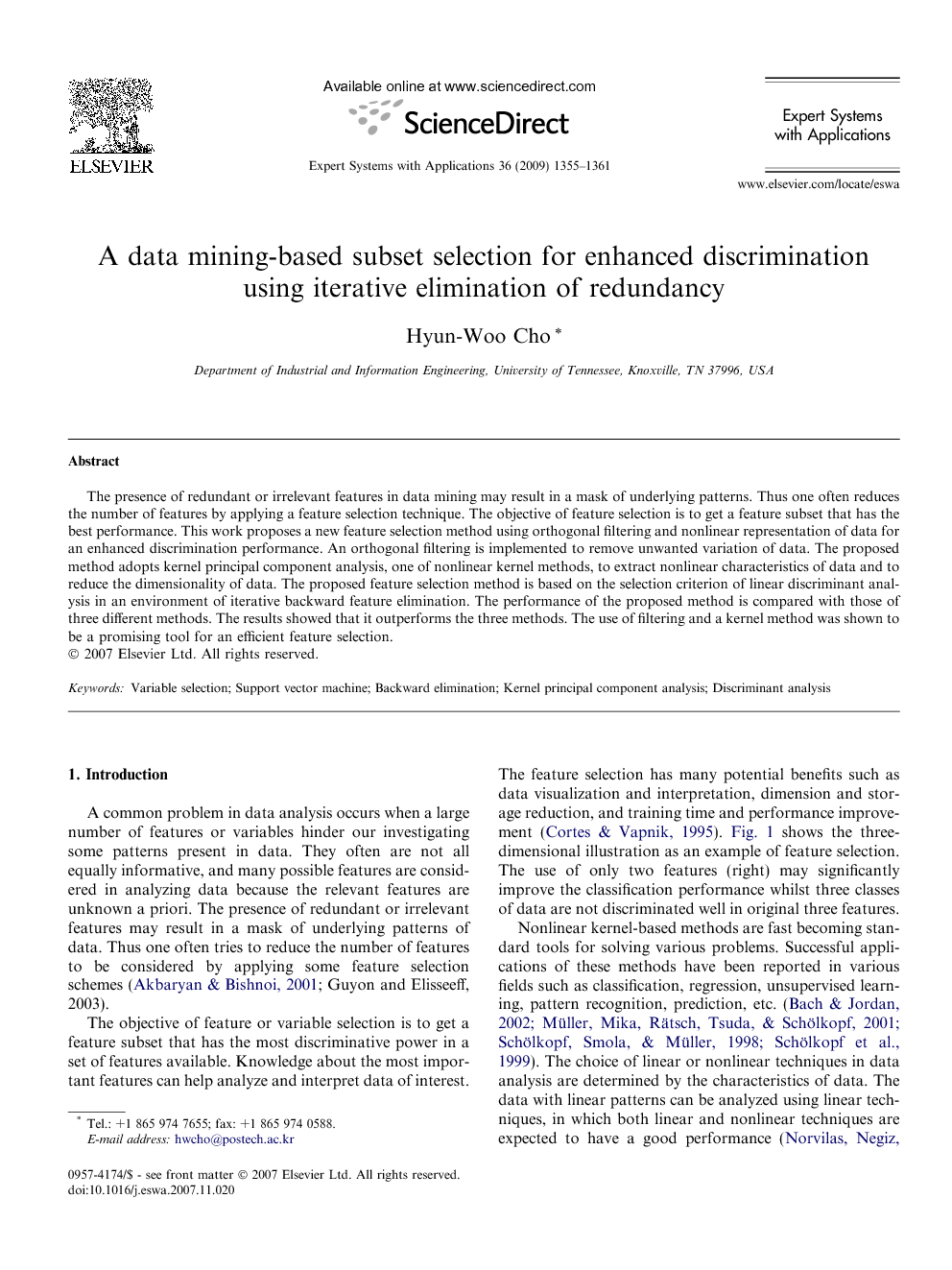

The objective of feature or variable selection is to find the feature subset with the most discriminative power among the available features. Knowledge of the most important features helps in analyzing and interpreting the data of interest. Feature selection has many potential benefits, such as easier data visualization and interpretation, reduced dimensionality and storage, shorter training time, and improved performance (Cortes & Vapnik, 1995). Fig. 1 gives a three-dimensional illustration of feature selection. Using only two features (right) may significantly improve classification performance, whereas the three classes are not well discriminated in the original three features.

Nonlinear kernel-based methods are fast becoming standard tools for solving a variety of problems. Successful applications of these methods have been reported in fields such as classification, regression, unsupervised learning, pattern recognition, and prediction (Bach and Jordan, 2002, Müller et al., 2001, Schölkopf et al., 1998 and Schölkopf et al., 1999). The choice between linear and nonlinear techniques in data analysis is determined by the characteristics of the data. Data with linear patterns can be analyzed using linear techniques; for such data, both linear and nonlinear techniques are expected to perform well (Norvilas et al., 2000 and Qian et al., 2004). However, applying linear techniques to nonlinear data will degrade the analysis results.

In this respect, many powerful kernel methods have been developed and used successfully in many application areas: support vector machine (SVM), kernel principal component analysis (KPCA), kernel Fisher discriminant analysis (KFDA), kernel partial least squares (KPLS), and kernel independent component analysis (KICA) (Bach and Jordan, 2002, Baudat and Anouar, 2000, Cortes and Vapnik, 1995, Qin, 2003, Rosipal and Trejo, 2001 and Schölkopf et al., 1998). The basic idea of kernel methods is that the input data are mapped into a kernel feature space by a nonlinear mapping function, and the mapped data are then analyzed. Several researchers have developed feature selection methods based on SVM (Guyon et al., 2002, Mao, 2004, Rakotomamonjy, 2003 and Weston et al., 2003). Guyon et al. (2002) proposed SVM-recursive feature elimination (SVM-RFE) for the selection of genes in microarray data. The objective of SVM-RFE is to identify a subset of predetermined size, drawn from all available features, for inclusion in the support vector classifier. Weston et al. (2001) developed a feature selection method that uses the leave-one-out error rate, minimized through gradient descent. Rakotomamonjy (2003) also proposed various selection criteria (e.g., a generalization error bound) in an SVM-RFE context.
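The basic idea of kernel methods stated above can be illustrated with a minimal sketch. It assumes a homogeneous degree-2 polynomial kernel on two-dimensional inputs, chosen only because its feature map can be written out explicitly; the function names (`poly_kernel`, `phi`, `rbf_kernel`, `kernel_matrix`) and the sample points are hypothetical and do not come from the methods cited here. The sketch shows that evaluating the kernel on the input data gives the same value as an inner product of the mapped data, so the mapping never has to be computed explicitly.

```python
import math


def dot(u, v):
    """Ordinary inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def poly_kernel(x, y):
    """Homogeneous degree-2 polynomial kernel: k(x, y) = (x . y)^2."""
    return dot(x, y) ** 2


def phi(x):
    """Explicit feature map for 2-D inputs whose inner product equals poly_kernel."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2.0) * x1 * x2, x2 * x2)


def rbf_kernel(x, y, gamma=0.5):
    """Gaussian (RBF) kernel; gamma=0.5 is an arbitrary illustrative value."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, y)))


def kernel_matrix(points, kernel):
    """Matrix of pairwise kernel values, the input to methods such as KPCA."""
    return [[kernel(p, q) for q in points] for p in points]


# Hypothetical sample points, used only for illustration.
points = [(1.0, 2.0), (3.0, -1.0), (0.5, 0.5)]
K = kernel_matrix(points, poly_kernel)

for p in points:
    for q in points:
        # Kernel value in input space vs. inner product in feature space.
        print(poly_kernel(p, q), dot(phi(p), phi(q)))
```

For kernels such as the Gaussian kernel, the feature space is infinite-dimensional and no finite `phi` can be written down, which is why kernel methods work with the kernel matrix alone.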

This work proposes a new feature selection method for classification. It employs orthogonal filtering and a nonlinear representation of the data as pre-treatment steps to enhance discrimination performance. Orthogonal filtering is used to remove unwanted variation in the data. The proposed method relies on KPCA to extract nonlinear characteristics of the data and to reduce its high dimensionality. The proposed feature selection scheme uses the selection criterion of linear discriminant analysis within an iterative backward feature elimination procedure. This paper is organized as follows. First, a review of SVM and the kernel version of principal component analysis is given. Then the proposed method is presented, followed by a case study that demonstrates it. Finally, concluding remarks are given.
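As a rough illustration of iterative backward feature elimination driven by a discriminant criterion, the following sketch may help. It is a simplified stand-in and not the proposed method: it omits the orthogonal filtering and KPCA steps, handles only two classes, and scores a feature subset with the two-class Fisher criterion J = d^T Sw^{-1} d, where d is the difference of class means and Sw the within-class scatter. The function names, the small ridge term, and the toy data are all assumptions made for this example.

```python
def mean_vector(rows):
    """Column-wise mean of a list of equal-length rows."""
    n = len(rows)
    return [sum(col) / n for col in zip(*rows)]


def within_scatter(groups, means):
    """Pooled within-class scatter matrix Sw."""
    d = len(means[0])
    sw = [[0.0] * d for _ in range(d)]
    for rows, m in zip(groups, means):
        for r in rows:
            diff = [a - b for a, b in zip(r, m)]
            for i in range(d):
                for j in range(d):
                    sw[i][j] += diff[i] * diff[j]
    return sw


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(aug[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (aug[i][n] - s) / aug[i][i]
    return x


def fisher_score(class_a, class_b, features, ridge=1e-6):
    """Two-class Fisher criterion d^T Sw^{-1} d on the chosen features."""
    a = [[row[f] for f in features] for row in class_a]
    b = [[row[f] for f in features] for row in class_b]
    ma, mb = mean_vector(a), mean_vector(b)
    sw = within_scatter([a, b], [ma, mb])
    for i in range(len(features)):
        sw[i][i] += ridge  # assumed small ridge to keep Sw invertible
    diff = [p - q for p, q in zip(ma, mb)]
    return sum(p * q for p, q in zip(diff, solve(sw, diff)))


def backward_elimination(class_a, class_b, n_keep):
    """Repeatedly drop the feature whose removal leaves the highest score."""
    features = list(range(len(class_a[0])))
    while len(features) > n_keep:
        candidates = [[f for f in features if f != drop] for drop in features]
        features = max(candidates, key=lambda s: fisher_score(class_a, class_b, s))
    return features


# Toy data (hypothetical): features 0 and 1 separate the classes, feature 2 is noise.
class_a = [(1.0, 1.2, 5.0), (1.2, 0.9, 3.0), (0.8, 1.1, 4.0), (1.1, 0.8, 6.0)]
class_b = [(3.0, 3.1, 4.5), (3.2, 2.9, 5.5), (2.8, 3.2, 3.5), (3.1, 2.8, 4.5)]
selected = backward_elimination(class_a, class_b, n_keep=2)
print(selected)
```

On this toy data the third feature has the same mean in both classes, so it is the first to be eliminated. A multi-class criterion or a different stopping rule would change only `fisher_score` and the loop condition.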